Raw UVC on Android

It was time to integrate support for the Nreal Light's stereo camera into our Android build, but our go-to library (nokhwa) does not work on Android. In fact, nothing seemed to support this specific camera on Android... So it was time to make our own USB Video Device Class (UVC) implementation (but not really).

Webcam support on Android

As far as I could gather, external camera support on Android has always been something of an afterthought. It was officially added in Android P (Android 9), but support for it is optional, i.e. it depends on whether the manufacturer enables it when building the firmware.

According to this very detailed Stack Overflow answer, it was not very well supported even on Android 11 phones. The official APIs did work on my test Samsung S9+, but it's running Android 13 (LineageOS), so that's not really representative.

Oh, by the way, permission handling has been broken for 4 years now.

What API to use

In native code on Android, you get access to all cameras (including the external ones) through the official NdkCamera API, which is the C binding of the Camera2 API (in Java/Kotlin land, Camera2 itself is no longer the recommended choice; CameraX is).

I usually go the "when in Rome" way and use the official recommendation, but NdkCamera is needlessly complicated to use. Just take a look at their "basic" example: when you see a 500-line Manager class in a "basic" example, you know you're in for some "fun" coding.

My problems specifically:

- It is callback-based, resulting in some very non-linear code

- Managing the state of all those interdependent objects looks like a nightmare

- There is no easy function to just get an image buffer. You have to go through an Android Surface (one provided by an ImageReader, specifically), so you have to manage that object and its events too. I wonder whether this introduces any latency or performance overhead; it is certainly one more point of failure.

- The bindings use multiple object types, none of which are wrapped nicely in the Rust Android NDK crate, meaning that I'd have to write my own wrappers with a lot of unsafe code

As a side note, OpenCV does have an Android video backend, so you can actually use the nice OpenCV interfaces.

Trying the stereo webcam with some apps

So it turns out none of the above is relevant anyway, because when I tried the Light's cameras with some apps, only the RGB camera worked. The stereo cam either didn't show up as an option, didn't show a picture, or crashed the app outright.

I assume the apps use the official API, because what else would they use.

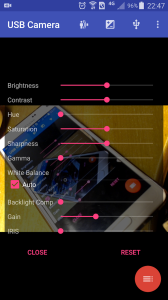

Except for the one simply called "USB Camera", which could actually read and display the picture. How does it do that?

Apparently, instead of going through the kernel driver and all the Android cruft they put around it, it uses a bundled libuvc and communicates with the camera directly through libusb. This solution was also mentioned in the Stack Overflow answer I linked above.

Here's a similar project on GitHub.

UVC

In the past I've already looked at the USB HID specs in detail, and at my previous job I had to go through some way more involved protocols (I even looked at some 3GPP stuff, but I will spare you that horror), so I jumped into the UVC specifications.

I have to say, these USB specs are pretty good. The docs are well written and easy to find, and the protocols themselves are very simple to implement if you only need basic functionality.

In this case, it turns out that all you have to do is bulk-read the USB endpoint, and you get raw frames.

That's it: no callbacks, no manager object, no ImageReader, just a synchronous libusb_bulk_transfer, and you get a raw frame.

This is especially true for the Nreal Light's stereo cam, because its USB stream is served by custom firmware on an OV580 DSP, which has only a single stream format and practically no controls.

So I looked at the USB descriptor of the camera, issued the bulk read on the relevant endpoint, and...

LIBUSB_ERROR_TIMEOUT

Ugh.

Looking at libuvc

I guess I have no choice but to clone the libuvc repo and look at what I actually need to do.

After cloning, I built the project with CMake and ran the example to verify that it actually works:

$ cd libuvc

$ mkdir build

$ cd build

$ cmake ..

$ make -j

$ ./example

UVC initialized

Device found

Device opened

DEVICE CONFIGURATION (05a9:0680/OV0001) ---

Status: idle

VideoControl:

bcdUVC: 0x0110

VideoStreaming(1):

bEndpointAddress: 129

Formats:

UncompressedFormat(1)

bits per pixel: 16

GUID: 5955593200001000800000aa00389b71 (YUY2)

default frame: 1

aspect ratio: 0x0

interlace flags: 00

copy protect: 00

FrameDescriptor(1)

capabilities: 00

size: 640x481

bit rate: 147763200-147763200

max frame size: 615680

default interval: 1/30

interval[0]: 1/30

END DEVICE CONFIGURATION

First format: (YUY2) 640x481 30fps

bmHint: 0001

bFormatIndex: 1

bFrameIndex: 1

dwFrameInterval: 333333

wKeyFrameRate: 0

wPFrameRate: 0

wCompQuality: 0

wCompWindowSize: 0

wDelay: 101

dwMaxVideoFrameSize: 615680

dwMaxPayloadTransferSize: 32768

bInterfaceNumber: 1

Streaming...

Enabling auto exposure ...

... enabled auto exposure

callback! frame_format = 3, width = 640, height = 481, length = 615680, ptr = 0x3039

callback! frame_format = 3, width = 640, height = 481, length = 615680, ptr = 0x3039

callback! frame_format = 3, width = 640, height = 481, length = 615680, ptr = 0x3039

callback! frame_format = 3, width = 640, height = 481, length = 615680, ptr = 0x3039

Cool, it seems to be working.
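As a quick sanity check, the numbers in that dump are consistent with each other. A 640x481 frame at 16 bits per pixel is exactly the reported max frame size. The bit rate is that size times 8 bits times 30 fps. dwFrameInterval is in 100 ns units per the UVC spec, so 333333 corresponds to 1/30 s. Here is a small Python sketch of that arithmetic, using only values printed above:

```python
# Values copied from the libuvc example output above.
WIDTH, HEIGHT = 640, 481
BITS_PER_PIXEL = 16
FRAME_INTERVAL_100NS = 333333  # dwFrameInterval, in 100 ns units per the UVC spec
MAX_FRAME_SIZE = 615680        # "max frame size" / dwMaxVideoFrameSize
BIT_RATE = 147763200           # bit rate from the frame descriptor (min == max)


def frame_size_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Size of one uncompressed frame in bytes."""
    return width * height * bits_per_pixel // 8


def fps_from_interval(interval_100ns: int) -> float:
    """Convert a UVC frame interval (100 ns units) to frames per second."""
    return 10_000_000 / interval_100ns


size = frame_size_bytes(WIDTH, HEIGHT, BITS_PER_PIXEL)
fps = round(fps_from_interval(FRAME_INTERVAL_100NS))
print(size, fps, size * 8 * fps)
```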

Now, looking at the code: while the initialization is simple, it does a bit more than just opening the fd, reading the descriptors and jumping straight to the bulk read.

It sends a kind of "start stream" packet. In uvc_start_streaming, before uvc_stream_start is called (which does the actual bulk reading), it calls uvc_stream_open_ctrl, which in turn calls uvc_stream_ctrl to send a control packet containing the stream format requested from the device. That format was previously filled in by querying the supported frame formats.
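In UVC terms, this control packet is a class-specific SET_CUR request on the VS_COMMIT_CONTROL selector of the VideoStreaming interface. The gdb backtrace further down (probe=0, req=UVC_SET_CUR) shows libuvc doing exactly that. The Python sketch below shows how the setup fields fit together, with constants taken from the UVC spec. commit_setup and send_commit are hypothetical helpers, and dev is assumed to be a pyusb-style device whose ctrl_transfer(bmRequestType, bRequest, wValue, wIndex, data) performs an OUT control transfer:

```python
# UVC constants (values from the USB Video Class specification).
SET_CUR = 0x01                      # class-specific request code
VS_COMMIT_CONTROL = 0x02            # VideoStreaming control selector: commit
REQ_TYPE_CLASS_INTERFACE_OUT = 0x21  # host-to-device | class | interface


def commit_setup(interface_number: int, payload: bytes) -> tuple:
    """Hypothetical helper: build (bmRequestType, bRequest, wValue, wIndex, data)
    for a SET_CUR on VS_COMMIT_CONTROL."""
    return (
        REQ_TYPE_CLASS_INTERFACE_OUT,
        SET_CUR,
        VS_COMMIT_CONTROL << 8,  # control selector goes in the high byte of wValue
        interface_number,        # streaming interface (bInterfaceNumber) in wIndex
        payload,                 # the probe/commit control structure
    )


def send_commit(dev, interface_number: int, payload: bytes) -> int:
    """Hypothetical helper: send the commit control through a pyusb-style
    device; for OUT transfers pyusb returns the number of bytes written."""
    return dev.ctrl_transfer(*commit_setup(interface_number, payload))
```

For the Light's stereo camera, the interface number would be the bInterfaceNumber of 1 from the libuvc output, and the payload would be the 34-byte buffer dumped with gdb below.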

Since we know that the device supports only a single format, which is unlikely to change, our implementation could fill this control structure out based on the spec and the descriptor we read.

Or we could just dump it from the libuvc example using gdb LMAO:

$ gdb example

(gdb) break uvc_stream_open_ctrl

(gdb) r

Thread 1 "example" hit Breakpoint 1, uvc_stream_open_ctrl (devh=0x555555578b80, strmhp=0x7fffffffd6b0, ctrl=0x7fffffffd720) at /tmp/libuvc/src/stream.c:1007

(gdb) break libusb_control_transfer

(gdb) c

Thread 1 "example" hit Breakpoint 2, 0x00007ffff7d75b10 in libusb_control_transfer () from /usr/lib/libusb-1.0.so.0

(gdb) bt

#0 0x00007ffff7d75b10 in libusb_control_transfer () from /usr/lib/libusb-1.0.so.0

#1 0x00007ffff7fb98b0 in uvc_query_stream_ctrl (devh=0x555555578b80, ctrl=0x7fffffffd720, probe=0 '\000', req=UVC_SET_CUR) at /tmp/libuvc/src/stream.c:235

#2 0x00007ffff7fb9e5b in uvc_stream_ctrl (strmh=0x5555555a2500, ctrl=0x7fffffffd720) at /tmp/libuvc/src/stream.c:402

#3 0x00007ffff7fbaf9f in uvc_stream_open_ctrl (devh=0x555555578b80, strmhp=0x7fffffffd6b0, ctrl=0x7fffffffd720) at /tmp/libuvc/src/stream.c:1037

#4 0x00007ffff7fbad74 in uvc_start_streaming (devh=0x555555578b80, ctrl=0x7fffffffd720, cb=0x555555555279 <cb>, user_ptr=0x3039, flags=0 '\000') at /tmp/libuvc/src/stream.c:941

#5 0x0000555555555610 in main (argc=1, argv=0x7fffffffd868) at /tmp/libuvc/src/example.c:174

(gdb) frame 1

(gdb) p/x buf

$1 = {0x1, 0x0, 0x1, 0x1, 0x15, 0x16, 0x5, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x65, 0x0, 0x0, 0x65, 0x9, 0x0, 0x0, 0x80, 0x0, 0x0, 0x80, 0xd1, 0xf0, 0x8, 0x8, 0xf0, 0x69, 0x38}
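Those 34 bytes are the UVC 1.1 video probe/commit control structure (bcdUVC is 0x0110 here), with all multi-byte fields little-endian. A small Python sketch that unpacks the dump using the field layout from the UVC spec shows that it lines up with what libuvc printed before streaming (bmHint 1, dwFrameInterval 333333, wDelay 101, dwMaxVideoFrameSize 615680, dwMaxPayloadTransferSize 32768). The last five fields are UVC 1.1 additions that libuvc's printout doesn't show:

```python
import struct

# The buffer dumped from gdb above (UVC 1.1 probe/commit control, 34 bytes).
DUMP = bytes([
    0x01, 0x00, 0x01, 0x01, 0x15, 0x16, 0x05, 0x00, 0x00, 0x00, 0x00, 0x00,
    0x00, 0x00, 0x00, 0x00, 0x65, 0x00, 0x00, 0x65, 0x09, 0x00, 0x00, 0x80,
    0x00, 0x00, 0x80, 0xD1, 0xF0, 0x08, 0x08, 0xF0, 0x69, 0x38,
])

# Field names and order follow the UVC 1.1 VS probe/commit control layout.
FIELDS = (
    "bmHint", "bFormatIndex", "bFrameIndex", "dwFrameInterval",
    "wKeyFrameRate", "wPFrameRate", "wCompQuality", "wCompWindowSize",
    "wDelay", "dwMaxVideoFrameSize", "dwMaxPayloadTransferSize",
    "dwClockFrequency", "bmFramingInfo", "bPreferedVersion",
    "bMinVersion", "bMaxVersion",
)
LAYOUT = struct.Struct("<HBBIHHHHHIIIBBBB")  # little-endian, no padding, 34 bytes


def parse_stream_ctrl(buf: bytes) -> dict:
    """Unpack a 34-byte UVC 1.1 probe/commit control into named fields."""
    return dict(zip(FIELDS, LAYOUT.unpack(buf)))


for name, value in parse_stream_ctrl(DUMP).items():
    print(f"{name}: {value}")
```

As a bonus, dwClockFrequency decodes to 150000000, i.e. a 150 MHz device clock.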

Test implementation

I put the setup packet into my test Python "implementation", and I got my frames.

Now all that's left to do is remove the 12-byte payload header that's added every 0x8000 bytes (as indicated by dwMaxPayloadTransferSize in the stream control), and we've got our sweet 640x481 YUYV raw frames. I leave this as an exercise to the reader.
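For anyone who wants to check their answer, here is one possible Python sketch. It assumes a hypothetical read_transfer() callable that returns the bytes of one bulk transfer. It relies on the UVC payload header format, where the first byte of each payload, bHeaderLength, gives the header size (12 here). It also assumes reading starts on a frame boundary; a sturdier version would resynchronise using the frame ID and end-of-frame bits in bmHeaderInfo.

```python
from typing import Callable


def strip_payload_header(transfer: bytes) -> bytes:
    """Drop the UVC payload header; its length is the first byte (bHeaderLength)."""
    if not transfer:
        return b""  # zero-length transfer: no header, no payload
    header_len = transfer[0]
    return transfer[header_len:]


def read_frame(read_transfer: Callable[[], bytes], frame_size: int) -> bytes:
    """Concatenate header-stripped payloads until frame_size bytes are collected.

    Assumes the first transfer read starts a new frame (no FID/EOF resync).
    """
    frame = bytearray()
    while len(frame) < frame_size:
        frame += strip_payload_header(read_transfer())
    if len(frame) != frame_size:
        raise ValueError(f"got {len(frame)} bytes, expected {frame_size}")
    return bytes(frame)
```

With pyusb, one untested way to wire this up would be read_frame(lambda: bytes(dev.read(0x81, 0x8000, 1000)), 615680). Here 0x81 is the bEndpointAddress of 129 from the descriptor dump.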

Oh, and it worked out of the box on Android too.

(Note: the frames are not actually YUY2, that's a lie. They're actually 640x2x480 grayscale, plus a fake last line that contains some metadata, most importantly the IMU timestamp. But this is a very Nreal Light-specific quirk.)
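Given that description, separating the image data from the metadata line is just slicing. Each of the 481 "YUY2" rows is 640 x 2 = 1280 bytes, which we treat as 1280 8-bit grayscale samples (an assumption). The first 480 rows hold the image and the last row is the metadata. The sketch below does not try to decide how the two camera views are arranged within a row, or where in the metadata line the IMU timestamp sits, since neither is spelled out here:

```python
ROW_BYTES = 640 * 2   # one 640-pixel YUY2 row = 1280 bytes = 1280 grayscale samples
IMAGE_ROWS = 480      # real image rows; the 481st row is the metadata line
FRAME_BYTES = ROW_BYTES * (IMAGE_ROWS + 1)  # 615680, the dwMaxVideoFrameSize


def split_frame(frame: bytes) -> tuple[list[bytes], bytes]:
    """Split a raw frame into 480 grayscale rows and the trailing metadata row."""
    if len(frame) != FRAME_BYTES:
        raise ValueError(f"expected {FRAME_BYTES} bytes, got {len(frame)}")
    image_end = ROW_BYTES * IMAGE_ROWS
    rows = [frame[i:i + ROW_BYTES] for i in range(0, image_end, ROW_BYTES)]
    metadata = frame[image_end:]
    return rows, metadata
```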

The final code in Rust can be seen here, along with an example.

Conclusion

Implementing your own USB device classes is good fun if you do it for very specific hardware. Can recommend.


If you need Augmented Reality problem solving, or want help implementing an AR or VR idea, drop us a mail at info@voidcomputing.hu