Rip and tear

Still field testing, still not satisfied with the image shake. IMU prediction helps a lot, but it is very inconsistent: sometimes it is rock stable, sometimes it is lagging quite a bit (in the delay sense).

I've also noticed a distinct lack of tearing, even though I'm using the Immediate presentation mode, which should have low latency but should also cause tearing.

The lack of tearing means I still have unnecessary latency...

The issue

Obviously, we need a way to test tearing. With normal rendering, it's hard to see, because the lines are soft, and the movements are not very aggressive.

So we just have to do the opposite: render a bright line that moves back and forth through the whole screen every 150ms or so.
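Here is a minimal sketch of such a test pattern. It assumes the line sweeps across the screen and back once every 150ms, which is a triangle wave in time. The function name and parameters are illustrative, not the renderer's actual code:

```rust
use std::time::Duration;

/// Hypothetical helper: horizontal pixel position of the bright test line at time `t`.
/// The line sweeps left-to-right and back once per `period` (a triangle wave),
/// fast enough that a torn frame shows the line broken into offset segments.
fn test_line_x(t: Duration, period: Duration, screen_width: u32) -> u32 {
    let period_s = period.as_secs_f64();
    // Phase in [0, 1) within the current sweep cycle.
    let phase = (t.as_secs_f64() % period_s) / period_s;
    // Triangle wave: 0 -> 1 during the first half of the cycle, 1 -> 0 during the second.
    let tri = if phase < 0.5 { phase * 2.0 } else { 2.0 - phase * 2.0 };
    let max_x = screen_width.saturating_sub(1) as f64;
    (tri * max_x).round() as u32
}
```

Each frame then draws a full-height vertical line at `test_line_x(elapsed, Duration::from_millis(150), width)`. Any horizontal break in that line means the frame was flipped mid-scanout.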

It's actually hard to capture this for the blog. Screenshots are taken from the backing buffer, which is never "torn", and if I photograph the screen, any tearing will come from the camera's shutter effects. Thankfully, it can be done with a long exposure:

This is a combination of multiple frames, and you can see the distinct lack of tearing.

Presentation modes, swapchain and buffering

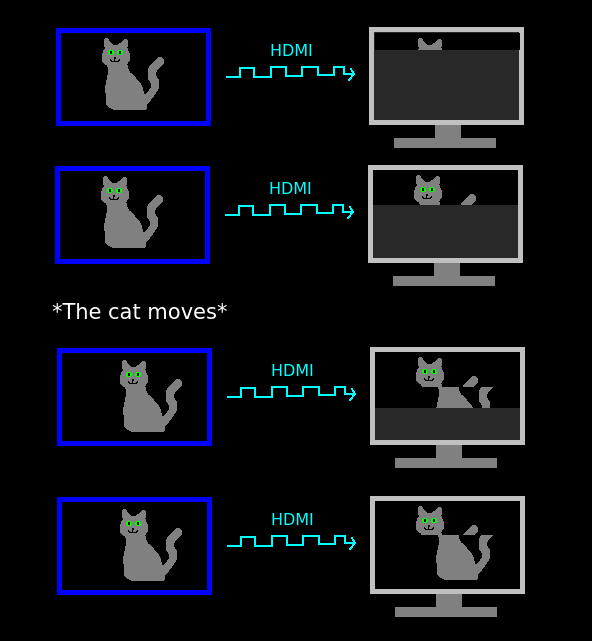

Just like in the old days, image data is sent to the display line by line, usually from top to bottom. If you update the currently displayed buffer while the GPU is sending it, part of the image will come from the old frame and part from the new one, causing tearing.
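To make "part of the image" concrete: if scanout takes one refresh period, then a flip partway through that period tears the image at a proportional scanline. The following is a simplified sketch. It assumes a constant scanout rate and ignores the blanking interval. The function is hypothetical:

```rust
use std::time::Duration;

/// Hypothetical helper: approximate scanline at which a tear appears if the buffer
/// is flipped `flip_offset` after the start of some scanout.
/// Assumes the `height` visible lines go out at a constant rate over one
/// `refresh_period` (blanking ignored). Panics if `refresh_period` is zero.
/// Returns `None` when the flip lands exactly at the start of a scanout,
/// in which case the whole frame comes from the new buffer.
fn tear_line(flip_offset: Duration, refresh_period: Duration, height: u32) -> Option<u32> {
    let period_ns = refresh_period.as_nanos();
    // Position of the flip within the current scanout cycle.
    let in_cycle_ns = flip_offset.as_nanos() % period_ns;
    let line = (in_cycle_ns * height as u128 / period_ns) as u32;
    if line == 0 { None } else { Some(line) }
}
```

For example, on a 1080-line panel with a 16ms scanout, a flip 8ms in tears the image at line 540, right in the middle.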

To combat this, we used to do "double buffering": one buffer is the displayed buffer, and the other is the one where we do our updates and renders. The displayed buffer is not touched while it's being sent to the monitor. When the frame has been sent and a new buffer is ready (rendered), the GPU swaps ("flips", "presents") the two buffers in a very cheap pointer operation and starts sending the new one.
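The key point is that the flip swaps an index and copies no pixels. A toy model makes this visible. Buffer "contents" are just frame ids here, and the type is hypothetical rather than any real graphics API:

```rust
/// Toy model of double buffering: one front buffer being scanned out,
/// one back buffer being rendered into. Contents are frame ids for illustration.
struct DoubleBuffer {
    buffers: [u64; 2], // frame id last rendered into each buffer
    front: usize,      // index of the buffer currently sent to the display
}

impl DoubleBuffer {
    fn new() -> Self {
        DoubleBuffer { buffers: [0, 0], front: 0 }
    }

    /// Render into the back buffer; the front buffer is never touched.
    fn render(&mut self, frame_id: u64) {
        let back = 1 - self.front;
        self.buffers[back] = frame_id;
    }

    /// Called at vertical blank: the "flip" just swaps which index is in front.
    fn flip(&mut self) {
        self.front = 1 - self.front;
    }

    /// Frame id the display is currently scanning out.
    fn displayed(&self) -> u64 {
        self.buffers[self.front]
    }
}
```

Rendering frame 1 leaves the display on frame 0 until `flip()` is called. That wait is exactly what prevents tearing, and it is also where the extra latency comes from.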

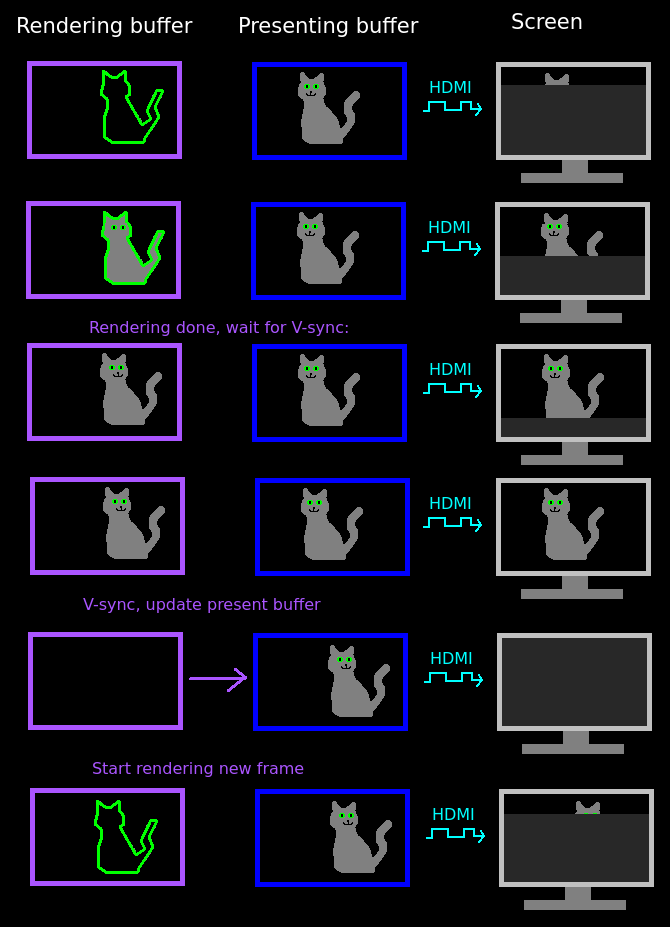

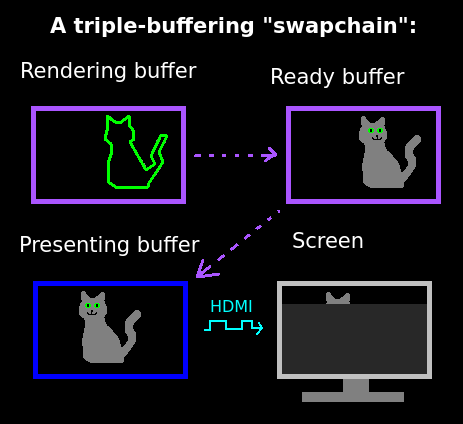

I say "used to" because the most common buffering method today is "triple buffering", which involves three buffers: one that is being displayed, one that is ready to display, and one that's still being rendered. This lets operations run in parallel, and even if the application has a small hiccup, it is smoothed out and you don't get jitter. If the application has rendered two full frames while one is still being displayed, the presentation engine will block it (backpressure).
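The backpressure rule can be sketched as a toy model. One buffer is on screen, the rest are either queued or free, and the application can only proceed while a free buffer exists. This is a simplified illustration. Acquiring, rendering and queueing are collapsed into a single hypothetical `present` call:

```rust
use std::collections::VecDeque;

/// Toy model of a 3-buffer FIFO swapchain with backpressure.
struct TripleBuffer {
    displayed: u64,        // frame id currently on screen
    queued: VecDeque<u64>, // rendered frames waiting for a vblank
    capacity: usize,       // total number of buffers (3 for triple buffering)
}

impl TripleBuffer {
    fn new() -> Self {
        TripleBuffer { displayed: 0, queued: VecDeque::new(), capacity: 3 }
    }

    /// A free buffer exists unless every buffer is either on screen or queued.
    fn can_acquire(&self) -> bool {
        1 + self.queued.len() < self.capacity
    }

    /// Acquire + render + queue in one step. Returns false where a real
    /// swapchain would block the application (backpressure).
    fn present(&mut self, frame_id: u64) -> bool {
        if !self.can_acquire() {
            return false;
        }
        self.queued.push_back(frame_id);
        true
    }

    /// At vertical blank the oldest queued frame goes on screen, and the
    /// previously displayed buffer becomes free again.
    fn vblank(&mut self) {
        if let Some(next) = self.queued.pop_front() {
            self.displayed = next;
        }
    }
}
```

After two presents with no vblank in between, a third `present` is refused. A queued frame therefore waits up to two refreshes before reaching the screen.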

The GPU driver gives you the choice: you can have tearing but very low latency (with the "Immediate" presentation mode), or you can have triple buffering (called "FIFO" mode, or "VSync enabled"). There is a newer mode called "Mailbox", which is almost like FIFO, but if there are multiple ready frames, all but the most recent one are dropped. This way you always get relatively fresh frames without tearing, at the cost of some wasted rendering.
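The difference between the three modes comes down to what happens on present and on vblank. Here is a toy model. The enum is a local stand-in, not `wgpu::PresentMode` or Vulkan's `VkPresentModeKHR`, although the variant names match. Queue length limits (the backpressure described above) are ignored:

```rust
use std::collections::VecDeque;

#[derive(Clone, Copy, Debug, PartialEq)]
enum PresentMode { Immediate, Fifo, Mailbox }

/// Called when the application presents `frame`. Returns the frame the
/// display switches to right away, if any.
fn on_present(mode: PresentMode, queue: &mut VecDeque<u64>, frame: u64) -> Option<u64> {
    match mode {
        // Shown at once, even mid-scanout: lowest latency, but tearing.
        PresentMode::Immediate => Some(frame),
        // Waits in line for a vblank.
        PresentMode::Fifo => {
            queue.push_back(frame);
            None
        }
        // The single "mailbox" slot is overwritten: older waiting frames are dropped.
        PresentMode::Mailbox => {
            queue.clear();
            queue.push_back(frame);
            None
        }
    }
}

/// Called at vertical blank. Returns the frame shown next, if any.
fn on_vblank(mode: PresentMode, queue: &mut VecDeque<u64>) -> Option<u64> {
    match mode {
        PresentMode::Immediate => None, // nothing is ever queued
        PresentMode::Fifo | PresentMode::Mailbox => queue.pop_front(),
    }
}
```

If frames 1, 2 and 3 are presented before a vblank, FIFO then shows 1 and 2 and 3 on consecutive refreshes, Mailbox shows only 3, and Immediate has already put each frame on screen as it arrived.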

I will not talk about G-Sync and friends (variable refresh rate) here, but I do suggest checking out how those work.

Composition

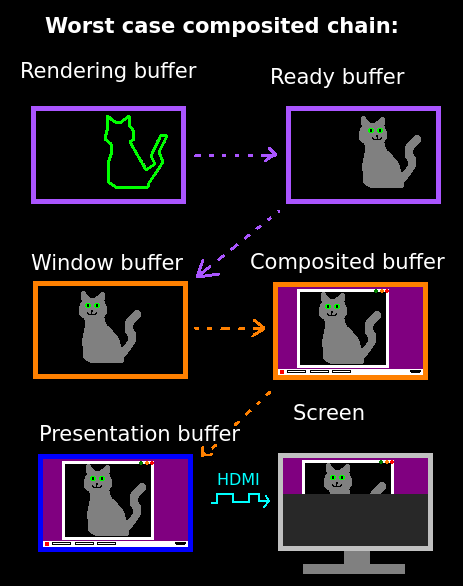

Windowing systems have a component called the "compositor", which takes all the rendered contents of the individual windows, and puts them on the screen with pretty decorations and shadows and whatnot.

They do this even if the window content was rendered with Vulkan, OpenGL, DirectX, etc. How? Well, when you render to your 3D surface, you don't actually render directly to the GPU frame buffers. You render to a sandbox buffer given to you by the compositor. Then the compositor puts that buffer on the screen when it feels like it, usually with a tear-free (i.e. buffered) presentation method.

This of course adds 1-2 additional frames of delay to the rendering:

Turns out, KDE's KWin leaves compositing on even for fullscreen windows, even if they are marked as Exclusive access in winit. GNOME is smarter and enables passthrough ("unredirect", or something like that).

To fix this, I can disable compositing by:

- Disabling it in system settings

- Pressing Alt+Shift+F12 (doesn't work for me)

- Using a KWin script

- Setting up a custom KWin rule

- Painfully integrating `_NET_WM_BYPASS_COMPOSITOR` into winit, because this is the official method according to the KDE guys, since unredirecting is apparently hard. (See the "Removal of unredirect fullscreen windows" heading in this post.)

While the last option is the real solution, I went with the custom KWin rule for now.

Worth mentioning: there is the `VK_KHR_display` Vulkan extension, supported by my laptop's Intel graphics card, which would let me pull the screen directly out of X's hands and create a rendering surface on it. This would also be nice, but it would mean messing around a lot with both ash and wgpu, and I don't really have the energy for that.

Tearing

So now I finally had tearing:

This is good and bad news.

The good news is that we no longer have unnecessary buffers, and the input-to-photon latency dropped from 45ms to 20ms. This all but eliminated the screen shake you can see even in our latest demo video.
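For scale, assume the 60 Hz display refresh rate. Then the 25ms saved is about one and a half refresh periods, which is in line with the 1-2 frames of compositor delay mentioned above:

$$
T = \frac{1}{60\,\mathrm{Hz}} \approx 16.7\,\mathrm{ms}, \qquad
\frac{45\,\mathrm{ms} - 20\,\mathrm{ms}}{T} = \frac{25\,\mathrm{ms}}{16.7\,\mathrm{ms}} \approx 1.5\ \text{frames}
$$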

The bad news is we have tearing, which can be a problem.

GPU <-> CPU sync

How to solve tearing without adding back the buffers and latency?

With Immediate mode and precise timing. All we have to do is finish rendering just before the GPU starts sending the next frame's pixels, so that the flip lands in the blanking interval instead of in the middle of scanout.
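Here is a minimal sketch of that timing. It assumes we somehow know the CPU-clock time of the last vblank and the refresh period, which, as the following paragraphs explain, is exactly the hard part on Linux. The function is hypothetical, and `render_estimate` and `margin` are tuning values rather than measured ones:

```rust
use std::time::{Duration, Instant};

/// Hypothetical frame-pacing helper: when to start rendering so the frame is
/// presented (in Immediate mode) just before the next scanout begins.
/// Panics if `refresh_period` is zero.
fn render_start_deadline(
    last_vblank: Instant,
    refresh_period: Duration,
    now: Instant,
    render_estimate: Duration,
    margin: Duration,
) -> Instant {
    let budget = render_estimate + margin;
    // First vblank strictly after `now`.
    let elapsed = now.saturating_duration_since(last_vblank);
    let periods_passed = elapsed.as_nanos() / refresh_period.as_nanos();
    let mut next_vblank = last_vblank + refresh_period * (periods_passed as u32 + 1);
    // Not enough time left to render before that vblank: aim for the one after.
    while next_vblank.saturating_duration_since(now) < budget {
        next_vblank += refresh_period;
    }
    next_vblank - budget
}
```

With a 16ms period, 4ms of rendering and a 1ms margin, a call 5ms after vblank says "start at 11ms". A call at 14ms is too late for the upcoming vblank, so it targets the following one and returns 27ms.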

Sounds easy enough, but on Linux it is not. There are a lot of solutions, but none of them are portable or easy. In fact, this has been a problem for the last ~30 years, and the main culprit is, of course, X.Org (a.k.a. X11).

There is literally no standard way to know when (in CPU time) the next flip will occur, or when the last one did.

There are some non-standard ones at least:

- The `VK_EXT_display_control` Vulkan extension has a function called `vkRegisterDisplayEventEXT`, with which you can set up a fence (fancy semaphore) that will be signaled when the flip occurs

- The `VK_GOOGLE_display_timing` Vulkan extension for Android, which has all kinds of nice statistics and sounds overall pleasant to use

- Maybe the `PresentCompleteNotify` extended notification in X11?

- GLX also has multiple different extensions for this.

- I also found some code in the Rokid driver where it gets back a timestamp of the last actual VSync on its own device. Unfortunately, my glasses don't send that event.

- And the best for last: apparently the Oculus Rift guys send back the contents of the first few pixels along with the sensor data. The rendering side sets those pixels to a kind of serial number, so when the sensor data comes back, they know the exact full-system delay.
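The pixel serial trick is simple enough to sketch. This is not Oculus' actual code. The encoding (a 32-bit serial in the red channel of the first four RGBA8 pixels) and the assumption that the device echoes those pixels back with a sensor report are both hypothetical:

```rust
use std::collections::HashMap;
use std::time::{Duration, Instant};

/// Hypothetical latency tracker for the "serial number in the first pixels" trick.
struct PixelSerialLatency {
    next_serial: u32,
    sent_at: HashMap<u32, Instant>, // serial -> when that frame was rendered
}

impl PixelSerialLatency {
    fn new() -> Self {
        PixelSerialLatency { next_serial: 0, sent_at: HashMap::new() }
    }

    /// Writes the serial (little-endian, one byte per pixel) into the red channel
    /// of the first four RGBA8 pixels and remembers the render time.
    fn stamp_frame(&mut self, first_pixels: &mut [[u8; 4]; 4], now: Instant) -> u32 {
        let serial = self.next_serial;
        self.next_serial = self.next_serial.wrapping_add(1);
        for (px, byte) in first_pixels.iter_mut().zip(serial.to_le_bytes()) {
            px[0] = byte;
        }
        self.sent_at.insert(serial, now);
        serial
    }

    /// Called when a sensor report arrives carrying the pixels that were on screen.
    /// Returns the full-system delay for that frame, if it is still known.
    /// (Entries for frames that are never reported would need pruning in real code.)
    fn on_sensor_report(&mut self, echoed: &[[u8; 4]; 4], received_at: Instant) -> Option<Duration> {
        let mut bytes = [0u8; 4];
        for (b, px) in bytes.iter_mut().zip(echoed) {
            *b = px[0];
        }
        let serial = u32::from_le_bytes(bytes);
        self.sent_at
            .remove(&serial)
            .map(|sent| received_at.saturating_duration_since(sent))
    }
}
```

Because the serial travels through the whole pipeline, the measured delay includes every buffer, compositor and link along the way. No vblank timestamp is needed.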

Of course, Valve had a much more detailed presentation about this exact problem back in 2015.

Consistency

Another issue with the lack of synchronization is that I can't really know how far ahead to predict. Fortunately, this is not a big issue currently: I'm rendering at 150+ FPS (and displaying at 60), so the transmission delay seems to be the main component (16ms, one refresh period), while rendering takes under 4ms. So even if the rendering time varies, it doesn't change the prediction interval much.
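Here is a minimal sketch of the prediction step. It assumes the IMU provides a body-frame angular velocity in rad/s and that the orientation is stored as a unit quaternion. The prediction interval would be roughly render time plus transmission delay (about 4ms + 16ms from the figures above). This uses constant-angular-velocity extrapolation, which is a common choice but not necessarily the exact predictor used here. The types are hypothetical:

```rust
/// Unit quaternion (w, x, y, z), hypothetical minimal type.
#[derive(Clone, Copy, Debug)]
struct Quat { w: f64, x: f64, y: f64, z: f64 }

impl Quat {
    /// Hamilton product `self * o`.
    fn mul(self, o: Quat) -> Quat {
        Quat {
            w: self.w * o.w - self.x * o.x - self.y * o.y - self.z * o.z,
            x: self.w * o.x + self.x * o.w + self.y * o.z - self.z * o.y,
            y: self.w * o.y - self.x * o.z + self.y * o.w + self.z * o.x,
            z: self.w * o.z + self.x * o.y - self.y * o.x + self.z * o.w,
        }
    }

    /// Rotation by `angle` radians around the unit vector `axis`.
    fn from_axis_angle(axis: [f64; 3], angle: f64) -> Quat {
        let (s, c) = (angle / 2.0).sin_cos();
        Quat { w: c, x: axis[0] * s, y: axis[1] * s, z: axis[2] * s }
    }
}

/// Predict the orientation `dt_s` seconds ahead, assuming the body-frame
/// angular velocity `omega` (rad/s) stays constant over that interval.
fn predict(q: Quat, omega: [f64; 3], dt_s: f64) -> Quat {
    let speed = (omega[0].powi(2) + omega[1].powi(2) + omega[2].powi(2)).sqrt();
    if speed < 1e-12 {
        return q; // not rotating: nothing to extrapolate
    }
    let axis = [omega[0] / speed, omega[1] / speed, omega[2] / speed];
    // Body-frame rotation is applied on the right.
    q.mul(Quat::from_axis_angle(axis, speed * dt_s))
}
```

For example, a head turning at 180°/s, predicted 20ms ahead, gets an extra 3.6° of rotation. That is the size of the error the display would otherwise show.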

I did end up adding some compensation for the rendering time, though: if it is e.g. 8ms for some reason (say, the GPU slowed down due to heat), I apply a small correction multiplier to the predicted additional rotation.
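The exact formula isn't given here. Below is one hypothetical way such a multiplier could look. Under constant angular velocity, the extra rotation is proportional to the prediction interval, so the correction is simply the ratio of the actual interval to the nominal one:

```rust
use std::time::Duration;

/// Hypothetical correction factor for the predicted extra rotation when the
/// measured render time differs from the one the prediction was tuned for.
fn render_time_correction(
    transmission: Duration,
    nominal_render: Duration,
    measured_render: Duration,
) -> f64 {
    let nominal = (transmission + nominal_render).as_secs_f64();
    let actual = (transmission + measured_render).as_secs_f64();
    actual / nominal
}
```

With 16ms of transmission and a nominal 4ms render, an 8ms frame gives (16 + 8) / (16 + 4) = 1.2, which means 20% more predicted rotation. The multiplier stays small because transmission dominates the interval.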

Conclusion

In the end, I just left the tearing alone; it doesn't look that bad. In fact, it looks kind of cyberpunk-ish in this context:

Thank god I'm doing low-fidelity AR that could basically be rendered on a GeForce 3, and not some high-end VR stuff. The worst that can happen is that it looks too holographic, but people won't get dizzy, because they see the real world around them.


If you need Augmented Reality problem solving, or want help implementing an AR or VR idea, drop us a mail at info@voidcomputing.hu