I haven't posted for like... 1.5 years, so it's high time I wrote about something useful or entertaining. Well, this post is not going to be that; instead, I will be talking about an obscure piece of code written in a dead language.

(2537 words)

We've found a drop-in replacement for the Nreal Light, called the Grawoow G530 (or Metavision M53, and who knows how many other names), so we finally have 3 more protocols to write about in this blog.

(2463 words)

For the second time in a week, I felt the need to apologize during a client call for the site looking amateurish, and that was kind of a red flag. We don't want a bland, cookie-cutter 2020s company info page, but we do need to make it less outdated while keeping the aesthetics.

(975 words)

It was time to integrate support for the Nreal Light's stereo camera into our Android build, but our go-to library (nokhwa) does not work on Android. In fact, nothing seemed to support this specific camera on Android... So it was time to make our own USB Video Device Class implementation (but not really).

(1434 words)

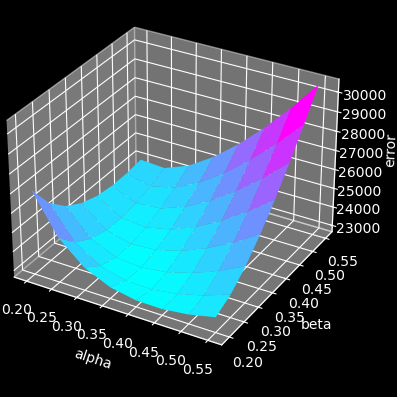

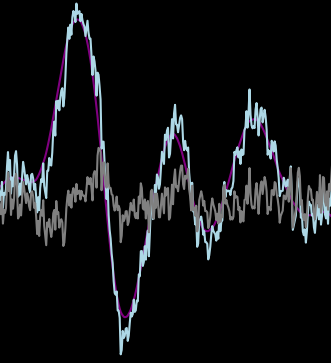

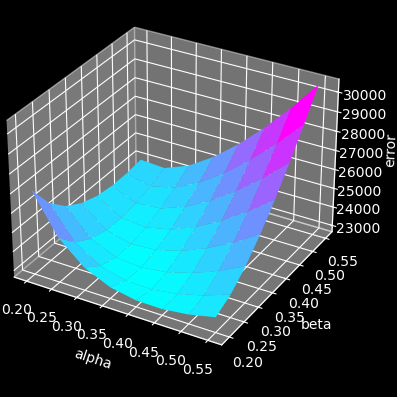

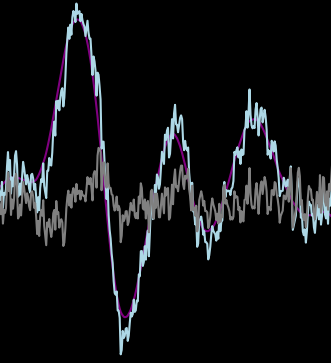

The whole FIR thing has served us well for the last few months, but some new developments have forced us to look into alternative prediction algorithms. Thankfully (?) I'm on many Discord channels, and someone mentioned Double Exponential Smoothing on one of them. Everyone loves good clickbait, so you won't believe what happened in the end...

(2235 words)
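Since the excerpt only names the technique, here is a minimal sketch of double exponential smoothing in its Holt's linear-trend form, to show what it actually computes: a smoothed level plus a smoothed trend, extrapolated forward to make a prediction. This is not the code from the post. The struct name, the `alpha`/`beta` parameter names, the fixed sample interval, and the initialization (first sample seeds the level, trend starts at zero) are all assumptions made for illustration.

```rust
/// Holt's linear double exponential smoothing: tracks a smoothed level and a
/// smoothed trend (change per sample), and extrapolates them to predict ahead.
/// Hypothetical sketch; not the code from the post.
pub struct DoubleExpSmoothing {
    alpha: f64, // level smoothing factor, 0 < alpha <= 1
    beta: f64,  // trend smoothing factor, 0 < beta <= 1
    level: Option<f64>,
    trend: f64,
}

impl DoubleExpSmoothing {
    pub fn new(alpha: f64, beta: f64) -> Self {
        Self { alpha, beta, level: None, trend: 0.0 }
    }

    /// Feed one new sample (assumed to arrive at a fixed sample interval).
    pub fn update(&mut self, sample: f64) {
        match self.level {
            // Assumption: the first sample seeds the level, the trend starts at zero.
            None => self.level = Some(sample),
            Some(prev_level) => {
                // Blend the new sample with the previous one-step-ahead forecast.
                let level = self.alpha * sample + (1.0 - self.alpha) * (prev_level + self.trend);
                // Blend the newly observed slope with the previous trend estimate.
                self.trend = self.beta * (level - prev_level) + (1.0 - self.beta) * self.trend;
                self.level = Some(level);
            }
        }
    }

    /// Predict the value `steps_ahead` sample intervals into the future.
    /// Returns `None` until at least one sample has been seen.
    pub fn predict(&self, steps_ahead: f64) -> Option<f64> {
        self.level.map(|level| level + steps_ahead * self.trend)
    }
}
```

Setting `alpha` and `beta` closer to 1 makes the predictor react faster, at the cost of passing more sensor noise through to the prediction.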

We are experimenting with various VSLAM algorithms, and a big part of those is always "bundle optimization". While it is just spicy Least Squares with sparse matrices, I couldn't find a well-maintained Rust library to do this. I did, however, find g2o, a C++ library which is very well structured, easy to use, and flexible. The only problem is that it's not Rust, it's C++...

(2437 words)

I've just finished implementing the third completely different USB protocol for my open-source AR glasses drivers project, so it's time to summarize my findings and drop some fun facts on you in the process.

(3529 words)

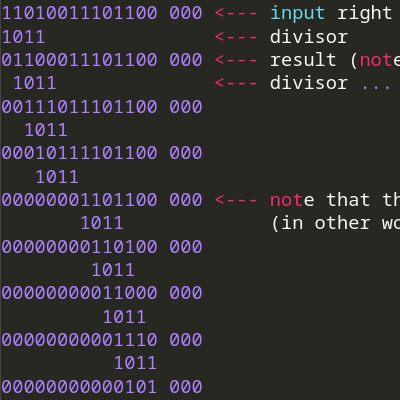

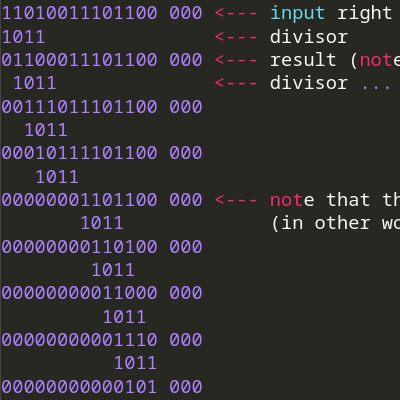

It's been a long time since I've taken part in any security CTFs, and even longer since I've written my last writeup. But, as life would have it, I came across a task during my AR adventures that was exactly like a CTF task: I found a non-standard CRC checksum in a firmware file and had to reverse-engineer its parameters.

(2419 words)
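For reference, a CRC's "parameters" are usually described with the Rocksoft model: width, polynomial, initial register value, input/output reflection and a final XOR. Below is a minimal Rust sketch of a parameterized CRC-32 plus a naive brute-force search over a handful of candidates. It assumes the checksum is 32 bits wide and that both the stored checksum and the exact checksummed byte range are already known. `CrcParams`, `crc32` and `find_params` are hypothetical names, the candidate lists are arbitrary, and this is not the method from the post; a genuinely non-standard CRC may need a much wider search (e.g. over every `init` value).

```rust
/// Parameters of a CRC-32 in the Rocksoft parameter model
/// (width fixed at 32 bits here for simplicity).
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub struct CrcParams {
    pub poly: u32,    // generator polynomial, normal (MSB-first) form, top bit implicit
    pub init: u32,    // initial register value
    pub refin: bool,  // reflect each input byte before processing
    pub refout: bool, // reflect the final register before the output XOR
    pub xorout: u32,  // value XORed into the final result
}

/// Bit-by-bit, MSB-first CRC-32 following the parameter model above.
pub fn crc32(p: &CrcParams, data: &[u8]) -> u32 {
    let mut crc = p.init;
    for &byte in data {
        let b = if p.refin { byte.reverse_bits() } else { byte };
        crc ^= (b as u32) << 24; // feed the byte into the top of the register
        for _ in 0..8 {
            crc = if crc & 0x8000_0000 != 0 { (crc << 1) ^ p.poly } else { crc << 1 };
        }
    }
    if p.refout {
        crc = crc.reverse_bits();
    }
    crc ^ p.xorout
}

/// Hypothetical brute-force helper: try every combination of a few candidate
/// polynomials, two common init/xorout values and both reflection flags, and
/// return all parameter sets that reproduce `expected` for `data`.
pub fn find_params(data: &[u8], expected: u32) -> Vec<CrcParams> {
    // Assumed candidates: well-known CRC-32 polynomials
    // (IEEE 802.3, Castagnoli, Koopman, CRC-32Q).
    const POLYS: [u32; 4] = [0x04C1_1DB7, 0x1EDC_6F41, 0x741B_8CD7, 0x8141_41AB];
    const INITS: [u32; 2] = [0x0000_0000, 0xFFFF_FFFF];
    const XOROUTS: [u32; 2] = [0x0000_0000, 0xFFFF_FFFF];
    let mut matches = Vec::new();
    for &poly in POLYS.iter() {
        for &init in INITS.iter() {
            for &xorout in XOROUTS.iter() {
                for &refin in [false, true].iter() {
                    for &refout in [false, true].iter() {
                        let p = CrcParams { poly, init, refin, refout, xorout };
                        if crc32(&p, data) == expected {
                            matches.push(p);
                        }
                    }
                }
            }
        }
    }
    matches
}
```

As a sanity check, the standard CRC-32 check value for the ASCII string `123456789` is `0xCBF43926`, with `poly = 0x04C11DB7`, `init = xorout = 0xFFFFFFFF` and both reflection flags set.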

One of the less fun parts of trying to run a company is having to create all kinds of marketing materials, such as product and investor slides.

I already use reveal.js for all my slideshow needs, so there's one thing that will make the job bearable: a scrolling synthwave-style background made with HTML+CSS.

(1559 words)

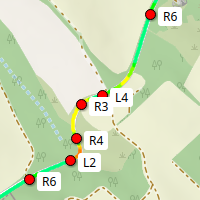

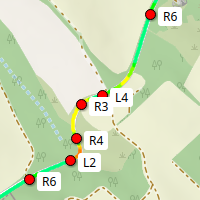

I've always wanted to do a rally-style demo for the Augmented Reality glasses, but first I need actual pace notes to display.

And there's no way I'm doing that manually.

(1746 words)

Still field testing, still not satisfied with the image shake. IMU prediction helps a lot, but it is very inconsistent: sometimes it is rock solid, sometimes it lags quite a bit (in the delay sense).

I've also noticed a definite lack of tearing, even though I'm using Immediate presentation mode, which should be low latency but should also cause tearing.

The lack of tearing means I still have unnecessary latency...

(1304 words)

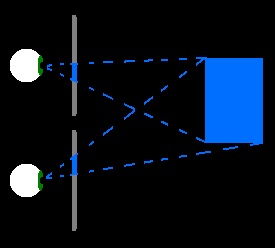

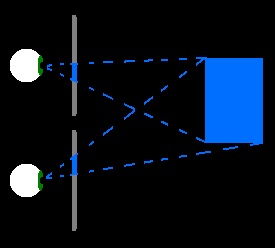

I noticed a weird distortion while field-testing the new graphics on the AR glasses: signs that should have been far away looked way too close, but they got further away as I approached them. Adjusting the pupil distance didn't seem to fix it without introducing other issues.

So I've decided to finally calibrate the projection parameters properly.

Content warning: all drawings are really badly made with KolourPaint (KDE's version of MS Paint).

(1963 words)

The main problem I have with the current AR glasses is that they have a motion-to-photon latency of around 35ms. I don't think this can be any lower, as this is 2 frames, and I'm pretty sure the GPU->DP->OLED driver->OLED chain takes this long.

So it's time to finally implement proper lag compensation in software.

(1309 words)
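To put 35ms into perspective: during a head turn of, say, 100°/s (an illustrative number, not a measurement), the virtual image trails the real world by 100°/s × 0.035 s = 3.5°. One simple form of software lag compensation is to extrapolate the head orientation forward by the expected latency, assuming the gyro's angular velocity stays constant over that interval. Below is a minimal sketch with a hand-rolled quaternion type. `Quat`, `from_angular_velocity` and `predict_orientation` are hypothetical names, and the post may well use a different predictor.

```rust
/// Minimal unit quaternion (w + xi + yj + zk) representing an orientation.
#[derive(Clone, Copy, Debug)]
pub struct Quat {
    pub w: f64,
    pub x: f64,
    pub y: f64,
    pub z: f64,
}

impl Quat {
    pub const IDENTITY: Quat = Quat { w: 1.0, x: 0.0, y: 0.0, z: 0.0 };

    /// Hamilton product `self * r`.
    pub fn mul(self, r: Quat) -> Quat {
        Quat {
            w: self.w * r.w - self.x * r.x - self.y * r.y - self.z * r.z,
            x: self.w * r.x + self.x * r.w + self.y * r.z - self.z * r.y,
            y: self.w * r.y - self.x * r.z + self.y * r.w + self.z * r.x,
            z: self.w * r.z + self.x * r.y - self.y * r.x + self.z * r.w,
        }
    }

    /// Rotation produced by a constant angular velocity `omega`
    /// (rad/s around x, y, z) applied for `dt` seconds.
    pub fn from_angular_velocity(omega: [f64; 3], dt: f64) -> Quat {
        let rate = (omega[0] * omega[0] + omega[1] * omega[1] + omega[2] * omega[2]).sqrt();
        if rate < 1e-12 {
            return Quat::IDENTITY; // no measurable rotation
        }
        let half_angle = 0.5 * rate * dt;
        // Dividing by `rate` normalizes omega into the rotation axis.
        let s = half_angle.sin() / rate;
        Quat { w: half_angle.cos(), x: omega[0] * s, y: omega[1] * s, z: omega[2] * s }
    }
}

/// Predict the head orientation `latency_s` seconds from now, assuming the
/// body-frame angular velocity reported by the gyro stays constant.
pub fn predict_orientation(current: Quat, gyro_body: [f64; 3], latency_s: f64) -> Quat {
    // Body-frame rotations compose on the right-hand side.
    current.mul(Quat::from_angular_velocity(gyro_body, latency_s))
}
```

Constant-velocity extrapolation is the crudest predictor; it overshoots whenever the head decelerates, which is why smoother predictors are worth exploring.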

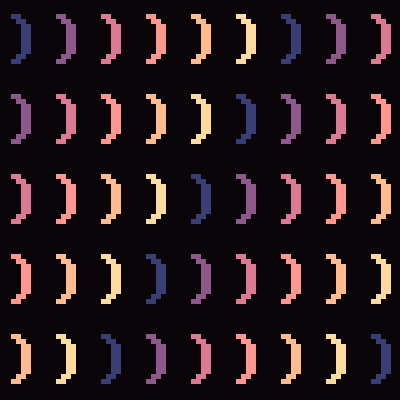

You can probably see that the logo at the top left is animated, at least if your browser decided to cooperate. This was done by using a .webm (or .mp4) video instead of just a regular image.

But how was the logo made? The answer is not "with image/video editing software a normal person would use".

(1766 words)